How to Choose the Right BigQuery Storage Pricing Model (Logical vs Physical) to Save up to 60%

Csaba Kassai

6 min read

BigQuery offers two storage pricing models (Logical and Physical) and most teams stick with the default without realizing they could be overpaying. The solution? Understand how each model works, analyze your data’s compression and change patterns, and switch to the optimal model for each dataset. In this guide, you’ll learn how to evaluate both storage pricing models, measure the difference for your workloads, and implement changes that can significantly reduce your storage costs – without touching a single query.

Why does storage pricing model choice matter?

The right model depends on your data’s characteristics, and choosing incorrectly can mean paying substantially more than necessary.

- If your data compresses well and changes infrequently (fewer versions stored in the time travel window), Physical often wins.

- If your data changes frequently during the time travel period or compression is modest, Logical may remain better.

The savings are real. Data from Rabbit users shows that teams using the optimal storage billing model for each dataset save 27.5% on BigQuery storage costs on average. In fact, the highest saving we saw with our clients was as much as 60%.

What’s the difference between Logical and Physical storage pricing?

The two models calculate your storage costs in fundamentally different ways:

- Logical storage charges based on the uncompressed, logical size of table data.

- Physical storage charges based on the actual compressed bytes BigQuery stores on disk. This includes active data and, importantly, the bytes retained for time travel and fail-safe recovery.

In practice, Physical can be cheaper even if the list price per GiB is higher, because compressed bytes for columnar data can be far smaller than logical bytes. However, with Physical, you’re also paying for time travel and fail-safe bytes, which means the net effect depends on both compression and your change rate during the time travel window.

Does switching BigQuery storage pricing models affect performance or security?

No. Switching the storage billing model is a billing-only setting at the dataset level. It does not change how your data is stored logically, how queries run, or your security posture. The only change is how storage spend is calculated.

This makes it a low-risk optimization: you’re purely optimizing the billing calculation, not the underlying infrastructure.

How are Physical bytes calculated?

BigQuery stores columnar data using compression. The total Physical footprint of a table is roughly the sum of:

- Active physical bytes (current version)

- Long-term physical bytes (unchanged segments past the long-term threshold)

- Time travel physical bytes (previous versions retained during the time travel window)

- Fail-safe physical bytes (short retention for disaster recovery)

Your effective Physical billable bytes are the combination of these categories. Logical billing, by contrast, focuses on logical bytes for the current version (with long-term logic) and does not separately bill time travel/fail-safe.

Two factors can swing the comparison over time:

- The time travel window configuration for the dataset/table

- The number of changes to a table during the time travel period (more rewrites → more time travel bytes)

That’s why “set it and forget it” doesn’t work—you need up-to-date analysis as your data patterns evolve.

Learn about Rabbit’s optimization recommendations for BigQuery

How do you measure both storage pricing models to compare them?

You can query INFORMATION_SCHEMA.TABLE_STORAGE to retrieve both logical and physical bytes per table. Here’s a project-level query to compare storage models:

-- Replace ${project_id} with your actual project ID and ${region} with your region

SELECT

table_catalog AS project_id,

table_schema AS dataset_id,

table_name,

active_logical_bytes,

long_term_logical_bytes,

total_logical_bytes,

active_physical_bytes,

long_term_physical_bytes,

time_travel_physical_bytes,

fail_safe_physical_bytes,

(active_physical_bytes + long_term_physical_bytes + time_travel_physical_bytes + fail_safe_physical_bytes) AS total_physical_bytes,

SAFE_DIVIDE(

active_physical_bytes + long_term_physical_bytes + time_travel_physical_bytes + fail_safe_physical_bytes,

NULLIF(total_logical_bytes, 0)

) AS physical_over_logical_ratio

FROM `${project_id}.region-${region}.INFORMATION_SCHEMA.TABLE_STORAGE`

ORDER BY total_logical_bytes DESC;Note: To calculate the actual costs, you’ll need to multiply the bytes by the specific pricing rates for your region and billing model, including active and long-term storage rates. Find the current BigQuery storage pricing in the Google Cloud pricing documentation.

Why is manual calculation complicated in practice?

Several factors make manual analysis challenging:

- You must factor all Physical categories (active, long-term, time travel, fail-safe) to compare apples to apples.

- The time travel window and change rate can shift, meaning today’s answer might be different next month.

- Pricing rates vary by region and change over time.

These complexities mean you need a systematic approach to evaluate each dataset and monitor for changes over time. We’ll explore Rabbit’s out-of-the box insights and recommendations in a later section. First, let’s see how to change between storage pricing models.

How to change BigQuery storage pricing models?

Once you’ve identified which model is optimal for your dataset, implementation is straightforward using the bq CLI:

bq update -d --storage_billing_model=PHYSICAL PROJECT_ID:DATASET_ID

# Switch back if needed

bq update -d --storage_billing_model=LOGICAL PROJECT_ID:DATASET_IDImportant considerations:

- Changes typically take up to 24 hours to take effect

- You must wait 14 days between changes to the same dataset

This means you want to be confident in your analysis before making a change, since you can’t quickly reverse course if you get it wrong.

How to keep storage pricing at optimum as data patterns change?

Manual monitoring doesn’t scale well, especially with large numbers of datasets. As your data’s compression ratio, change rate, or time travel configuration evolves, the optimal model can flip—and you’d never know unless you’re constantly re-running your analysis manually.

Automation solves this problem. Rabbit can:

- Provide a clear, per-dataset and per-table view of Logical vs Physical, with effective compression ratios and time travel impact

- Give explicit recommendations of which model to use right now

- Send notifications when the recommendation changes (for example, if your change rate spikes during the time travel window or you adjust the window itself)

- Automatically create Git Pull Requests about the dataset pricing change if it is managed by Terraform

For teams managing dozens or hundreds of datasets, automated monitoring ensures you don’t miss savings opportunities or accidentally increase spend when data patterns shift.

For cloud storage optimization best practices, see our blog post:

The ultimate guide for GCP cost optimization - part 2 (BigQuery and Cloud Storage)

Automated BigQuery savings: Rabbit’s optimization recommendations

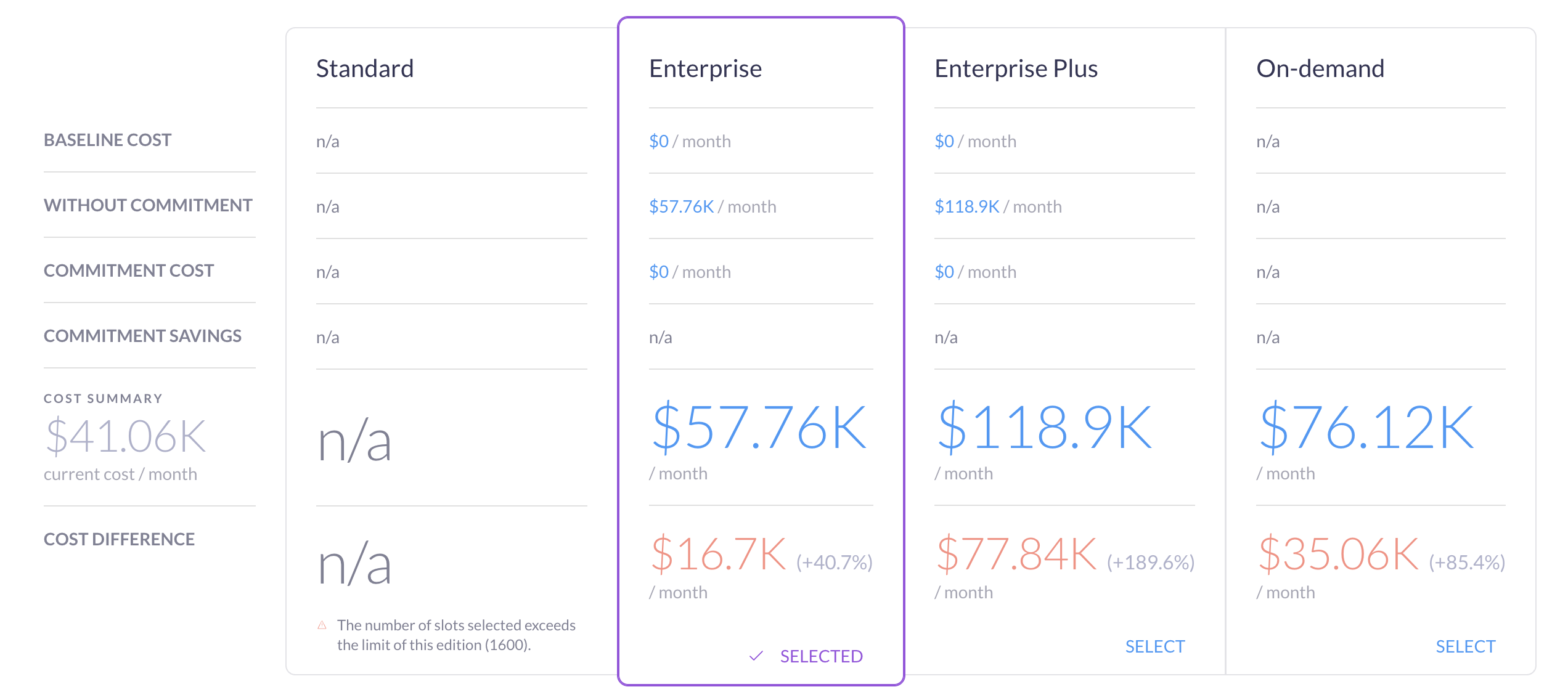

If you’re looking for a comprehensive solution, Rabbit provides continuous monitoring of your BigQuery storage with model-specific recommendations for each dataset. The platform surfaces exactly where switching models makes sense and estimates the savings before you make any changes.

Rabbit customers can also automate model changes using a customer-side tool. You define guardrails (eligible datasets, approval flows, maintenance windows), and the tool applies changes when recommendations are favorable. This closes the loop from insight to action without any manual effort.

Start a free trial or contact us to see your current opportunity and enable automatic updates.