BigQuery Capacity-based Pricing: How to Optimize Your Reservation Cost

Zoltan Guth

9 min read

Managing BigQuery reservation costs effectively has become critical for organizations looking to maximize their cloud investment. As companies scale their data operations, understanding how to optimize these costs can lead to significant savings without sacrificing performance.

This article explores proven strategies for reducing BigQuery expenses through smarter reservation management, from selecting appropriate pricing models to implementing dynamic slot allocation.

By following these optimization techniques, many organizations have achieved cost reductions of up to 35% while maintaining or even improving query performance. Let’s dive into the specific approaches you can implement to optimize your BigQuery reservation spending.

Choosing the Right BigQuery Pricing Model

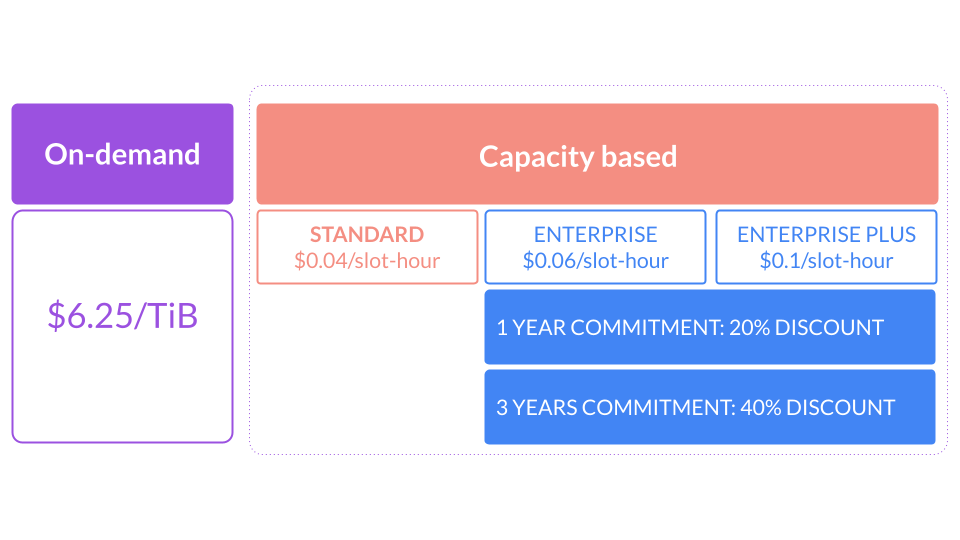

When considering cloud cost optimization and optimizing BigQuery reservation costs, it’s essential to first understand the available pricing models for compute-related expenses. BigQuery offers two main pricing options:

- On-Demand Pricing – You are charged based on the amount of data read by your queries.

- Capacity-Based Pricing – You pay for the slots consumed by your queries.

Based on the BigQuery documentation, slots are described as follows:

“A BigQuery slot is a virtual compute unit used to execute SQL queries or other job types. During query execution, BigQuery automatically determines the number of slots required. This depends on the data volume, query complexity, and available slots. More slots enable faster query execution and greater concurrency.”

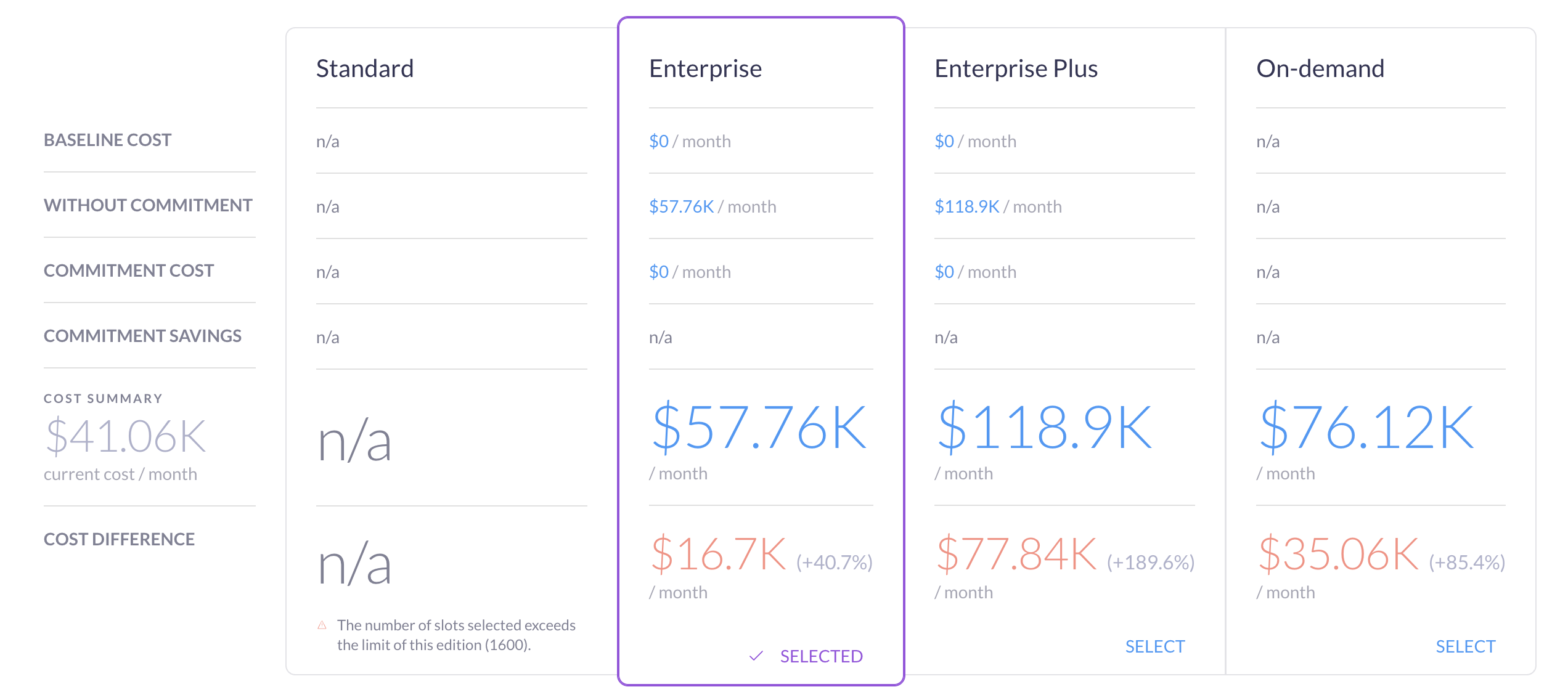

With capacity-based BigQuery pricing, you can create reservations and choose from three different editions, each with varying features and costs. Additionally, you can commit to slots for 1 or 3 years in exchange for a 20% or 40% discount, respectively.

If this blog is about optimizing reservation costs, why discuss on-demand pricing? Because there’s no universal rule that dictates which compute pricing model is best for a company. The optimal choice depends on your specific BigQuery usage patterns.

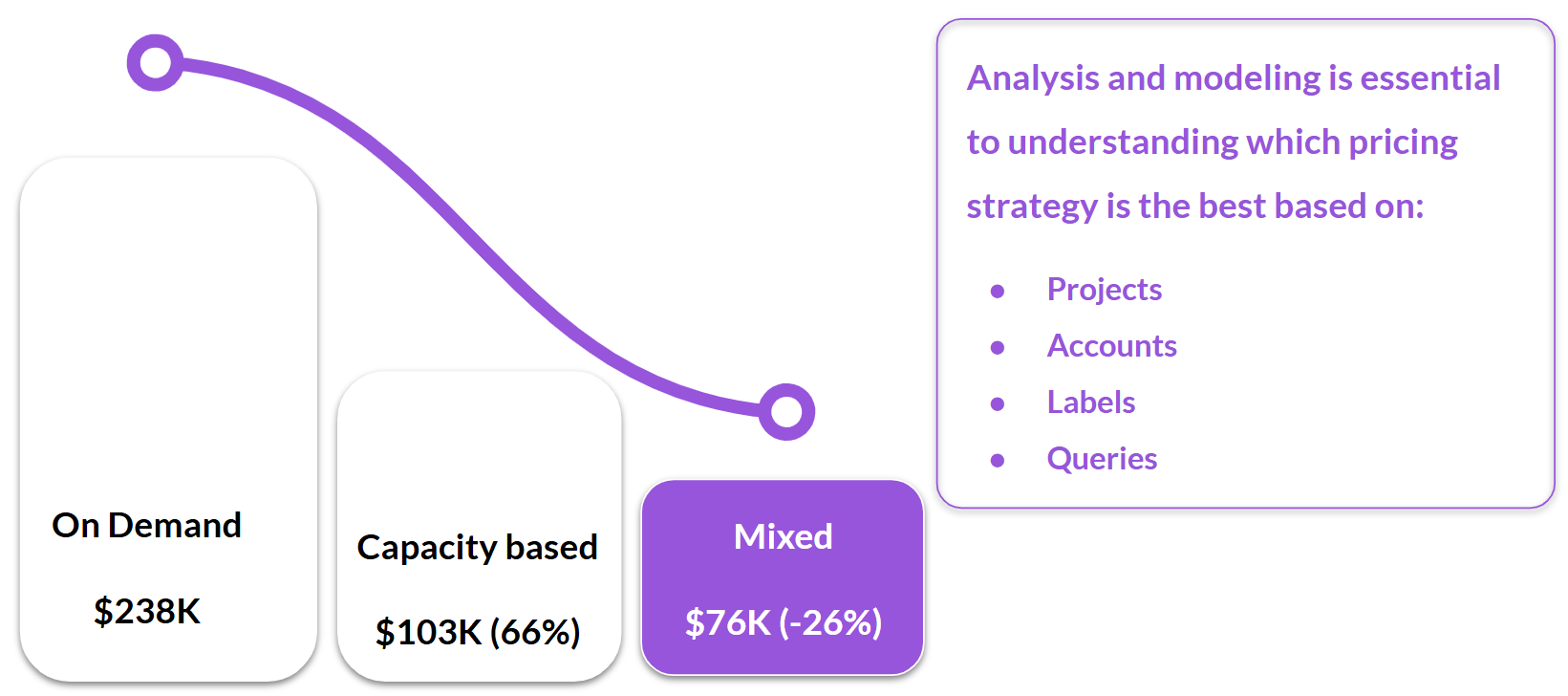

Consider a mid-size company’s BigQuery costs:

- If all jobs were on on-demand pricing, the monthly cost would be $238K.

- Switching entirely to capacity-based pricing would reduce costs to $103K—a 66% cloud cost reduction.

- However, a mixed model—evaluating each query and choosing the optimal pricing model—further lowers costs to $76K, an additional 26% BigQuery cost savings.

Many queries read only small amounts of data (low on-demand cost) but involve complex calculations that require many slots (high capacity cost), and vice versa. To maximize savings, analyze these differences at the query, account, label, or project level. While BigQuery reservations currently apply at the project level, restructuring recurring queries into separate projects can allow finer control over BigQuery pricing models.

Setting Maximum Slots Efficiently

Understanding BigQuery’s Autoscaler

BigQuery’s autoscaler adjusts slot allocation in increments of 50 and requires a minimum of 60 seconds of billing. This can lead to significant inefficiencies in BigQuery slot-based cost.

From our experience working with many clients, BigQuery lacks true slot autoscaling. Instead, it employs a capping mechanism that prevents jobs from exceeding the max slot setting. Adjusting this setting takes effect almost instantly—within 5 seconds—whether increasing or decreasing slots.

However, the “autoscaler” is responsible more for billing purposes that fill slot usage based on past usage with the 60-second minimum rule, often leading to wasted slot allocation. According to Google’s documentation:

“Any autoscaled capacity is retained for at least 60 seconds. This 60-second period is called the scale-down window. Any peak in capacity resets the scale-down window, treating the entire capacity level as a new grant.”

The Cost Impact of High Max Slot Settings

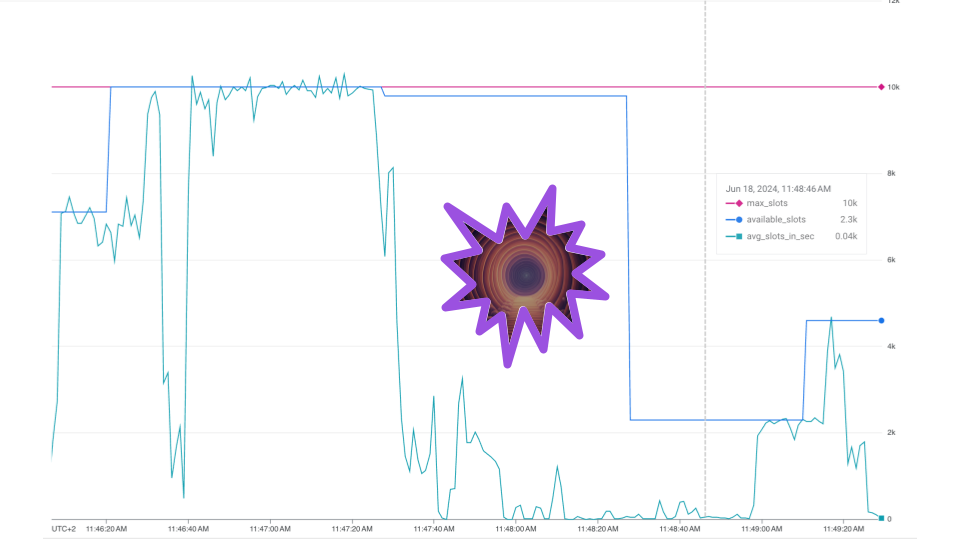

If max slots are set too high, queries will utilize them rapidly (if they can), leading to fast query execution. For instance, if a job utilises all of the max slots and runs for just 5 seconds, and no other jobs run within that minute, you may pay up to 12x more due to the 60-second minimum billing rule. The following diagram shows the autoscaler scaling up rapidly to accommodate the job, then keeping the slots high for 60 long seconds even though there is no other usage here.:

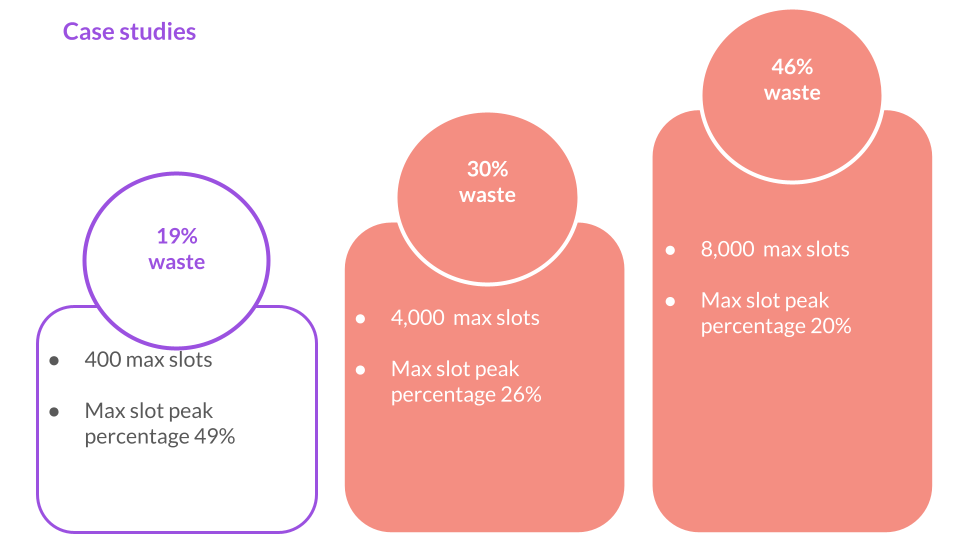

Our BigQuery slot analysis shows that inefficient slot usage can lead to 50% cost waste! The diagram below illustrates three different slot allocation profiles that we have identified from our customers:

- Aggressive tuning results in 19% waste which is usually acceptable for BigQuery reservation’s overhead.

- More conservative tuning sees 30%-48% slot waste, depending on performance requirements.

Google’s documentation suggests:

“Autoscaler is most efficient for heavy, long-running workloads, such as workloads with multiple concurrent queries. Avoid sending queries one at a time, since each query scales the reservation where it will remain scaled for a 1 minute minimum. If you continuously send queries, causing a constant workload, setting a baseline and buying a commitment provides constant capacity at a discounted price.”

The following rules help you to reduce inefficiencies within your reservations:

- Lowering max slots reduces BigQuery costs but may impact performance.

- Splitting reservations based on different workload requirements allows better cloud cost control.

- Adjusting slots dynamically at specific times (e.g., increasing before critical midnight jobs) can minimize wastage.

How Rabbit Automation Helps Optimize Costs

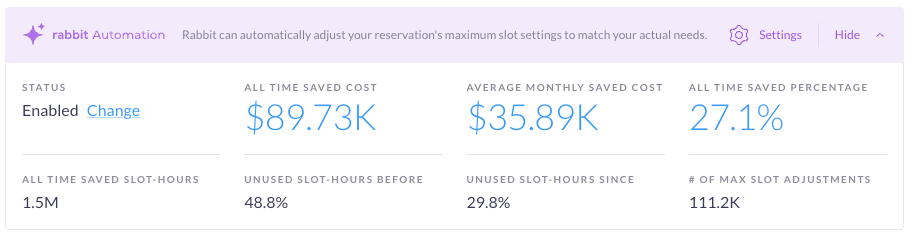

To address this challenge, Rabbit introduced an automation feature that dynamically adjusts max slot settings every second. This approach has reduced BigQuery reservation costs by up to 35% for clients—without requiring complex reservation splitting or manual slot adjustments.

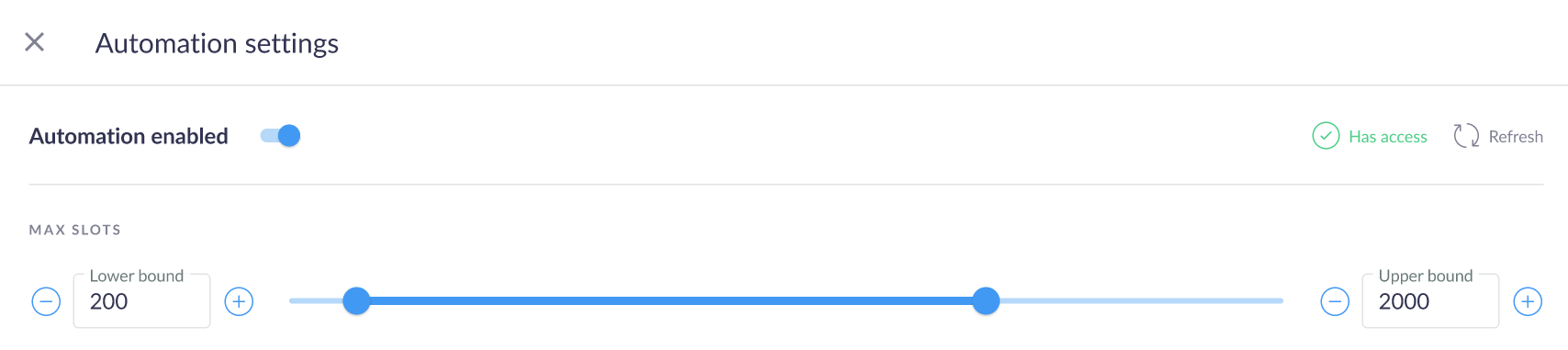

Rabbit continuously learns usage patterns and monitors max slot utilization in real-time to lower the BigQuery slot wastage every minute, but still maintain performance requirements. Users can define upper and lower slot limits, ensuring a minimum guaranteed capacity while preventing unnecessary slot overuse.

Identifying Unused Slot-Hours in Your Reservations

To analyze wasted slot-hours in your BigQuery reservations, use the SQL query below. Please replace the following placeholders accordingly:

[REGION]: The GCP region where your reservation is located. If you have multiple regions, run the query separately for each.

[RESERVATION_GCP_PROJECT]: The GCP project ID where the reservation is hosted. If you have multiple projects, execute the query for each one individually.

Check the required permissions to execute the query here:

https://cloud.google.com/bigquery/docs/information-schema-jobs-timeline-by-organization#required_permissions

WITH

intervals AS (

SELECT

TIMESTAMP_ADD(TIMESTAMP_SUB(TIMESTAMP_TRUNC(CURRENT_TIMESTAMP(), MINUTE), INTERVAL 30*24*60 MINUTE), INTERVAL i MINUTE) interval_start,

TIMESTAMP_ADD(TIMESTAMP_SUB(TIMESTAMP_TRUNC(CURRENT_TIMESTAMP(), MINUTE), INTERVAL 30*24*60 MINUTE), INTERVAL i+1 MINUTE) interval_end

FROM

UNNEST(GENERATE_ARRAY(0, (30*24*60))) i

),

jobs_info AS (

SELECT

reservation_id,

TIMESTAMP_TRUNC(period_start, MINUTE) AS period_start,

SUM(period_slot_ms) / 1000 / 60 AS period_slots,

FROM

`region-[REGION]`.INFORMATION_SCHEMA.JOBS_TIMELINE_BY_ORGANIZATION

WHERE

period_start >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL 30*24*60 MINUTE)

AND job_creation_time >= TIMESTAMP_SUB(CURRENT_TIMESTAMP(), INTERVAL (30*24*60 + 6) MINUTE)

AND job_type = 'QUERY'

AND (statement_type != "SCRIPT"

OR statement_type IS NULL)

GROUP BY

1, 2

),

autoscale AS (

SELECT

CONCAT(project_id, ":[REGION].", reservation_name) AS reservation_id,

TIMESTAMP_TRUNC(change_timestamp, MINUTE) AS valid_from,

IFNULL(LEAD(TIMESTAMP_TRUNC(change_timestamp, MINUTE)) OVER(PARTITION BY project_id, reservation_name ORDER BY change_timestamp), CURRENT_TIMESTAMP())AS valid_until,

autoscale.current_slots,

autoscale.max_slots,

slot_capacity

FROM

`[RESERVATION_GCP_PROJECT].region-[REGION]`.INFORMATION_SCHEMA.RESERVATION_CHANGES

WHERE

autoscale.current_slots IS NOT NULL

)

SELECT

j.reservation_id,

SUM(IFNULL(j.period_slots,0)) / 60 AS used_slot_hours,

SUM((a.slot_capacity + a.current_slots)) / 60 AS available_slot_hours,

FROM

intervals i

LEFT OUTER JOIN jobs_info j ON j.period_start = i.interval_start

JOIN autoscale a ON

j.reservation_id = a.reservation_id

AND ((a.valid_from <= i.interval_start AND i.interval_start < a.valid_until)

OR (i.interval_start < a.valid_from AND A.valid_from < i.interval_end))

GROUP BY reservation_id

Maximizing Commitment Savings

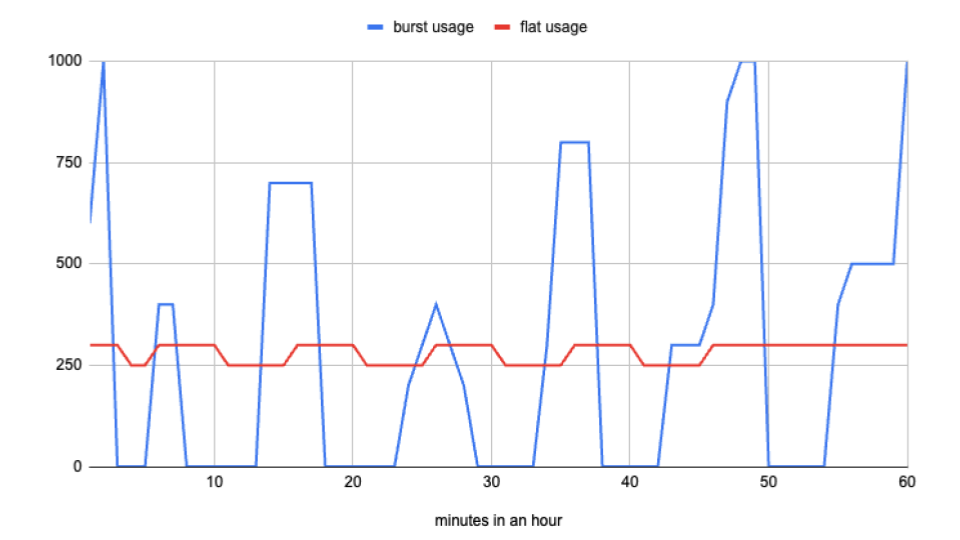

In the diagram below, you can see two theoretical usage patterns: one represents bursty usage, while the other shows flat usage. Assuming the same usage continues for the entire month, the total BigQuery cost without any commitment would be $12.1K, as both scenarios consume the same number of slot-hours.

However, for the bursty usage pattern, even with the smallest possible 50-slot commitment, the cost would actually increase by 6% to $12.8K, making commitment not worthwhile in this case. On the other hand, for the flat usage pattern, you could theoretically commit to 250 slots for a 1-year term, reducing BigQuery costs by 18% to $10K.

This highlights the importance of analyzing BigQuery slot usage on a per-second or per-minute basis to identify peak periods and flatten usage wherever possible from a business perspective.

For example, imagine you have 10 different pipelines, each requiring 100 slots for 6 minutes. The business requirement is to update the data once per hour for each of those pipelines. If all pipelines start at the same time (e.g., at the 0th minute of each hour), your usage spikes to 1,000 slots for 6 minutes, followed by 54 minutes of inactivity.

Now, consider an alternative approach: instead of running all pipelines simultaneously, you stagger their start times by 6 minutes each. The first pipeline starts at 0 minutes, the second at 6 minutes, the third at 12 minutes, and so on. With this simple adjustment, you still meet the business requirement—all pipelines update data once per hour—but now your slot usage is evenly spread at 100 slots throughout the entire hour.

This flattened usage pattern makes it possible to take advantage of commitment-based discounts—20% for a 1-year commitment or 40% for a 3-year commitment—leading to significant cloud cost savings.

Conclusion

Optimizing BigQuery reservations requires a multi-faceted approach, including:

- Choosing between on-demand and capacity-based pricing based on usage patterns.

- Analyzing query-level costs to determine the best pricing model.

- Managing max slots efficiently to minimize wasted slot-hours.

- Flattening workloads to maximize commitment savings.

- Leveraging optimization automation tools like Rabbit for real-time slot adjustments.

By following these strategies, companies can achieve significant cloud cost savings while maintaining high query performance.